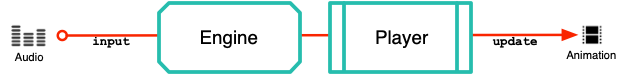

Engines and Players

SG Com has two main components: Engines and Players.

The Engine does the main processing work: it converts an incoming audio stream into synchronous facial animation. Output animation includes lip sync, full-face emotional expressions, blinks, eye darts, and even head movement, all driven by the speaker's voice through Speech Graphics algorithms. There is a small processing latency of 50 milliseconds between audio input and animation output. An Engine has one audio source and generates animation for one character. See SG Com Input for details on input audio.

Engine output must be streamed to a Player for buffering, decoding and playback. To animate the character's face, the application advances the current time in the Player and updates the scene with the animation values the Player returns for that time. The Player automatically interpolates animation values for whatever times are requested.
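The buffering and interpolation behavior described above can be pictured with a small stand-in. This is a sketch under stated assumptions, not the SG Com Player API: the class name `HypotheticalPlayer`, its methods, and the use of linear interpolation between frames are all illustrative choices. It stores timestamped frames of animation values and returns values for any requested time.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import List


@dataclass
class HypotheticalPlayer:
    """Illustrative stand-in for a Player (not the SG Com API).

    Buffers timestamped animation frames and returns channel values
    linearly interpolated at any requested time.
    """

    times: List[float] = field(default_factory=list)         # frame times in seconds, ascending
    frames: List[List[float]] = field(default_factory=list)  # channel values, one list per frame

    def buffer(self, time: float, values: List[float]) -> None:
        # Frames are assumed to arrive in time order, as from a stream.
        if self.times and time <= self.times[-1]:
            raise ValueError("frames must arrive in increasing time order")
        self.times.append(time)
        self.frames.append(list(values))

    def sample(self, t: float) -> List[float]:
        if not self.times:
            raise LookupError("no animation buffered yet")
        # Requests outside the buffered range are clamped to the nearest frame.
        if t <= self.times[0]:
            return list(self.frames[0])
        if t >= self.times[-1]:
            return list(self.frames[-1])
        i = bisect_right(self.times, t)  # now times[i - 1] <= t < times[i]
        t0, t1 = self.times[i - 1], self.times[i]
        w = (t - t0) / (t1 - t0)
        return [a + w * (b - a) for a, b in zip(self.frames[i - 1], self.frames[i])]


# Playback loop sketch: advance time once per rendered frame and apply the values.
if __name__ == "__main__":
    player = HypotheticalPlayer()
    player.buffer(0.0, [0.0, 1.0])
    player.buffer(0.1, [1.0, 0.0])
    t, dt = 0.0, 1.0 / 60.0
    while t <= 0.1:
        print(f"t={t:.3f}s values={player.sample(t)}")  # a real app would update the scene here
        t += dt
```

The render loop drives time, not the arrival of packets, which is why interpolation is needed: the display frame rate rarely matches the spacing of the animation frames.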

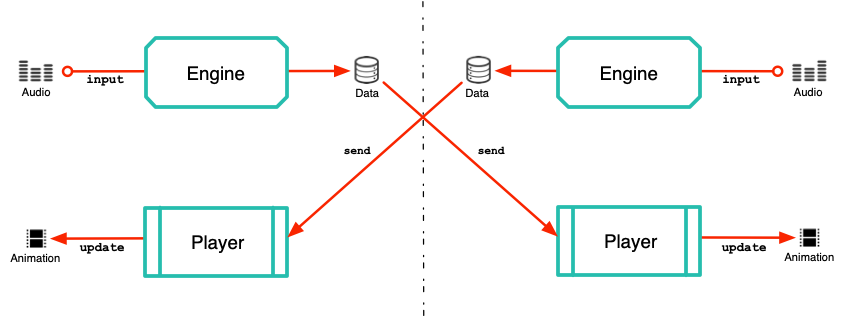

Networked vs local use cases

The input voice may be used to drive an animated character either locally or remotely over a network. In the local use case, both Engine and Player are on the same machine:

In the remote use case, audio is run through the Engine locally, and the Engine output (together with the audio) is sent to remote Players for buffering and playback. Below is a depiction of a two-way conversation over a network, in which each side has a local Engine that processes its own input audio and a Player that outputs the other participant's animation.

SG Com does not perform network operations. Data must be transmitted by the application. To get the benefit of SG Com within a fully networked, end-to-end service, see Rapport.cloud.
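Because the application owns transmission, it also decides how Engine output and audio are packaged for the wire. A minimal sketch, assuming a hypothetical length-prefixed format of our own design (not defined by SG Com) and treating the Engine output as an opaque byte string; the socket or other transport is left out.

```python
import struct
from typing import Tuple

# Hypothetical wire format chosen for illustration only (not defined by SG Com):
#   8-byte big-endian double : time stamp of the packet, in seconds
#   4-byte unsigned int      : number of audio bytes that follow
#   4-byte unsigned int      : number of Engine-output bytes that follow
#   audio bytes, then Engine-output bytes (an opaque blob)
HEADER = struct.Struct(">dII")


def encode_packet(timestamp: float, audio: bytes, engine_output: bytes) -> bytes:
    """Serialize one packet of audio plus Engine output for transmission."""
    return HEADER.pack(timestamp, len(audio), len(engine_output)) + audio + engine_output


def decode_packet(data: bytes) -> Tuple[float, bytes, bytes]:
    """Parse a packet produced by encode_packet on the receiving side."""
    timestamp, n_audio, n_anim = HEADER.unpack_from(data, 0)
    start = HEADER.size
    end = start + n_audio + n_anim
    if len(data) < end:
        raise ValueError("truncated packet")
    audio = data[start:start + n_audio]
    engine_output = data[start + n_audio:end]
    return timestamp, audio, engine_output
```

On the receiving side, the decoded Engine output would be handed to the local Player and the audio queued for playback at the matching time.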

Engine idle

SG Com does not require sound in order to continue generating behavior on the target character. There can be breaks in the audio input, and the Engine keeps running, generating nonverbal behavior and responding to behavior controls. This is called idling. Note that idling adds output that has no corresponding audio input, which must be taken into account when synchronizing output of audio and animation.

There are two ways to generate idle behavior: by running an Engine with no audio input, or by inputting zeros for the audio. See Idle mode in the SG Com API Tutorial.
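A minimal sketch of the second approach (inputting zeros), assuming mono 16-bit PCM input; the audio format the Engine actually expects is described in SG Com Input, and `silence_pcm16` and `next_input_chunk` are hypothetical helper names rather than SG Com functions.

```python
from typing import Optional


def silence_pcm16(duration_s: float, sample_rate: int) -> bytes:
    """Return duration_s seconds of mono 16-bit PCM silence."""
    n_samples = round(duration_s * sample_rate)
    return bytes(2 * n_samples)  # each 16-bit sample is two zero bytes


def next_input_chunk(mic_chunk: Optional[bytes], chunk_s: float, sample_rate: int) -> bytes:
    """Choose what to feed the Engine for the next chunk period.

    When no microphone audio is available, substitute silence of the same
    duration so the Engine keeps idling and its time base keeps advancing.
    """
    if mic_chunk:
        return mic_chunk
    return silence_pcm16(chunk_s, sample_rate)
```

For example, a 10 ms gap at 16 kHz becomes 160 zero samples (320 bytes). Those samples count toward the Engine's time base exactly like real audio.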

Synchronizing output

To synchronize audio and animation output, keep the following in mind:

SG Com latency. After an audio sample is input into the Engine, there is a 50 millisecond delay before the corresponding packet of animation is output. A delay of this size is perceptible as audio-visual asynchrony. Therefore, audio received from the microphone or another source cannot be played back at the moment it is input to the Engine; it must be delayed so that it is output in synchrony with the animation.
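One way to apply this, assuming the application plays audio from its own sample buffer, is a fixed delay line that holds each input sample for 50 ms before sending it to the audio output. At 16 kHz that is 16000 × 0.05 = 800 samples. `AudioDelay` is a hypothetical helper, not part of SG Com; the Player time domain described next remains the reference for keeping the two streams aligned.

```python
from collections import deque
from typing import List

ENGINE_LATENCY_S = 0.050  # Engine processing latency stated in this document


class AudioDelay:
    """Delays audio by a fixed number of samples so that playback lines up
    with animation that the Engine produces ENGINE_LATENCY_S later."""

    def __init__(self, sample_rate: int, latency_s: float = ENGINE_LATENCY_S) -> None:
        self.delay_samples = round(sample_rate * latency_s)
        # Pre-fill with silence: the first delay_samples outputs are zeros.
        self._buf = deque([0] * self.delay_samples)

    def process(self, samples: List[int]) -> List[int]:
        """Push new input samples; return the same number of delayed samples."""
        out = []
        for s in samples:
            self._buf.append(s)
            out.append(self._buf.popleft())
        return out
```

Feeding the same samples to the Engine and to `AudioDelay.process` means each sample reaches the speaker at the moment its animation becomes available.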

Time is audio-based. The animation playback loop involves passing time values to the Player to retrieve animation frames. The time domain of the Player is based on the audio that went into the Engine (plus any idle ticks). Therefore, to keep animation synchronized with audio, you must know the time stamp of the audio, correcting for any lost audio data. Time 0 corresponds to the very first frame of audio that was input into the Engine.
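To see why lost data matters, suppose audio is sent to a remote Player in chunks. If the receiver timed each chunk by counting only the samples it actually received, a lost chunk would make every later chunk appear too early. A minimal sketch, assuming 16-bit samples: the sending side stamps each chunk with its absolute sample offset in the Engine's input stream (idle zeros included), so the receiver can compute the Player time directly. `AudioChunk`, `EngineInputCounter`, and `player_time` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AudioChunk:
    offset: int    # absolute index of the chunk's first sample in the Engine input stream
    samples: bytes


class EngineInputCounter:
    """Sender side: stamps each chunk with the absolute sample offset at which
    it enters the Engine. Idle input (zeros) advances the counter too."""

    def __init__(self) -> None:
        self.next_offset = 0

    def stamp(self, samples: bytes, bytes_per_sample: int = 2) -> AudioChunk:
        chunk = AudioChunk(self.next_offset, samples)
        self.next_offset += len(samples) // bytes_per_sample
        return chunk


def player_time(chunk: AudioChunk, sample_rate: int) -> float:
    """Receiver side: Player time (seconds) at which this chunk must be output.

    Derived from the sender's offset, so chunks lost in transit do not shift
    the time stamps of the chunks that do arrive. Time 0 is the first sample.
    """
    return chunk.offset / sample_rate
```

For example, with three 160-sample chunks at 16 kHz, the third chunk starts at 320 / 16000 = 0.02 s whether or not the second one arrives.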

Metadata updates

In addition to generating real-time animation, the SG Com Engine also generates updates from its internal algorithms, which can be used externally to inform other processes. At present, SG Com generates updates for these kinds of changes:

Change in behavior mode

Change in facial expression

Change in detected vocal activity

In each case, the notification is accompanied by a text payload describing the new state (e.g., the new behavior mode).
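An application that reacts to these notifications might route them by kind. In this sketch the enum members, the `MetadataRouter` class, and any payload strings are hypothetical; the way SG Com actually delivers updates is described in its API documentation.

```python
from enum import Enum, auto
from typing import Callable, Dict, List


class UpdateKind(Enum):
    # Hypothetical names for the three kinds of update listed above.
    BEHAVIOR_MODE = auto()
    FACIAL_EXPRESSION = auto()
    VOCAL_ACTIVITY = auto()


Handler = Callable[[str], None]


class MetadataRouter:
    """Forwards (kind, text payload) notifications to subscribed callbacks
    and remembers the most recent payload for each kind."""

    def __init__(self) -> None:
        self._handlers: Dict[UpdateKind, List[Handler]] = {kind: [] for kind in UpdateKind}
        self.latest: Dict[UpdateKind, str] = {}

    def subscribe(self, kind: UpdateKind, handler: Handler) -> None:
        self._handlers[kind].append(handler)

    def dispatch(self, kind: UpdateKind, payload: str) -> None:
        self.latest[kind] = payload  # payload text describes the new state
        for handler in self._handlers[kind]:
            handler(payload)
```

Keeping the latest payload per kind lets other processes query the current state without subscribing to every change.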